TAIPEI, June 3 – Global technology giant Nvidia Corporation revealed its latest roadmap for new semiconductors that will arrive on a ‘one-year rhythm’.

For the first time, it also revealed the Rubin platform, slated for release in 2026, which will succeed its recently launched Blackwell platform.

Founder and chief executive officer Jensen Huang noted that the company's basic philosophy is to build at the scale of an entire data centre, disaggregate it, sell it in parts on a one-year rhythm, and push everything to the limits of technology.

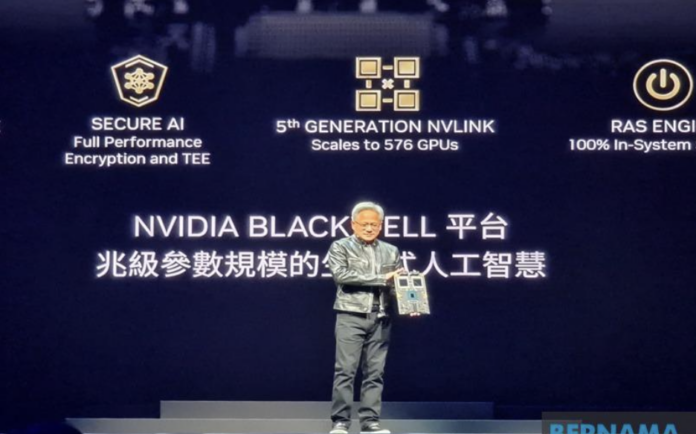

This came after Huang unveiled an array of systems powered by the Nvidia Blackwell architecture, featuring Grace central processing units (CPUs) as well as Nvidia networking and infrastructure, which enterprises can use to build artificial intelligence (AI) factories and data centres to drive the next wave of generative AI breakthroughs.

“The next industrial revolution has begun. Companies and countries are partnering with Nvidia to shift the trillion-dollar traditional data centres to accelerated computing and build a new type of data centre — AI factories — to produce a new commodity: artificial intelligence.

“From server, networking and infrastructure manufacturers to software developers, the whole industry is gearing up for Blackwell to accelerate AI-powered innovation for every field,” he said during his keynote address ahead of Computex 2024 on Sunday.

According to Huang, Nvidia's comprehensive partner ecosystem includes Taiwan Semiconductor Manufacturing Company (TSMC), the world's leading semiconductor manufacturer and an Nvidia foundry partner, as well as global electronics makers that provide key components for building AI factories.

These include manufacturing innovations such as server racks, power delivery, cooling solutions and more, he said.

“As a result, new data centre infrastructure can quickly be developed and deployed to meet the needs of the world’s enterprises and further accelerated by Blackwell technology, Nvidia Quantum-2 or Quantum-X800 InfiniBand networking, Nvidia Spectrum-X Ethernet networking and Nvidia BlueField-3 data processing units (DPUs).

“Enterprises can also access the Nvidia AI Enterprise software platform, which includes Nvidia NIM inference microservices, to create and run production-grade generative AI applications,” Huang added.

In March, YTL Power International announced the formation of YTL AI Cloud, a specialised provider of massive-scale graphics processing unit (GPU)-based accelerated computing, with a data centre in Johor. It also announced the deployment of Nvidia Grace Blackwell-powered DGX Cloud, an AI supercomputer for accelerating the development of generative AI.